Are you looking for how to fix Google 404 error pages, soft 404 errors, and 404 Not Found crawl errors? This review explains how to remove Google 404 errors, fix URL problems, and clear 404 Not Found crawl errors.

This post walks through the tools that help: the crawl errors checker and broken link checker in Google Webmaster Tools, 404 Not Found tracker plugins for WordPress, an IP address checker, the robots.txt Tester, and Fetch as Google.

These best practices should reduce the Error 404 Not Found entries in Google Search Console and the number of 404 Not Found pages on your website.

Table of Contents

You can use CTRL + F to find the heading and read the content.

- What is a 404 Error Not Found

- Increased Crawl Errors 404 Not Found Requests Means

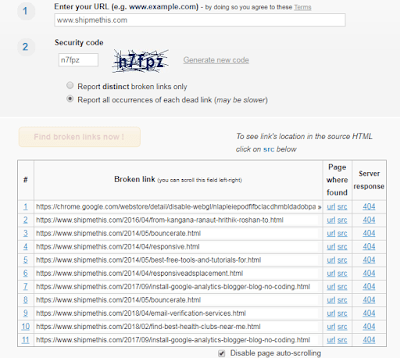

- Google Webmaster Tools Broken Link Checker

- How to Access Crawl Errors in Google Search console

- Types of Crawl Errors in Google Search Console

- Crawl Errors Checker to Track 404 Not Found URL

- Understand the 404 Non Existing Url Structure to Identify Spam

- Check IP Address to find and fix URL errors on a Google search console

- How to Remove Google 404 error using robots.txt file

- Fetch as Google tool – Crawler Simulator

- Permalink Settings to create a custom URL structure

- Use a proper robots.txt file

- Crawl errors checker – robots.txt Tester in Google Search Console

- Block Unknown IP addresses to fix google crawl url 404 Not Found

- How to Identify Bad IP address and Block them

- How to Block IP address in .htaccess To Remove Spam Crawl errors

- How to fix crawl errors in google webmaster tools Using Sitemap

- Remove URLs from the index with the Google Search Console

- How to deal with 404 errors from Facebook

What is a 404 Error Not Found

An Error 404 Not Found appears when a page on the website has been moved or deleted. Google requests the page from the website's server. The server looks for the page, cannot find it, and answers the request with a 404 error.

An increase in 404 Not Found errors means many pages on the website have been moved or deleted. Google keeps a list of the pages it has found on your website and remembers them for a long time, even after you delete a page. Google assumes the page still exists and keeps asking the server for it.

Increased Crawl Errors 404 Not Found Requests Means

An increase in Error 404 Not Found requests means that Google is having trouble reaching pages it has listed for your website. This happens in a few cases.

1. A large number of pages were moved or deleted.

2. A spam attack is generating extra crawl errors.

3. Low-quality spam backlinks point to pages that do not exist.

4. An installed plugin is misconfigured.

5. Malware or hacked content sits inside the website folders – Here is a good read on how to Fix Hacked Content Found in Google Search Console.

You will have to identify why these 404 errors are appearing and what is causing them. A 404 is classed as a client-side error: the requested URL does not exist on the server, so a user who visits it gets the error instead of the actual web page.

→ Crawl errors affect ranking

This gives users a bad experience. They will close the page immediately or click back to return to Google. This results in an increased bounce rate and ultimately a loss of search ranking.

Google said long ago that 404 (not found) errors will not hurt your website's search ranking. In my experience that is not the whole story: Google lowers the ranking of websites that give a bad user experience. Returning a 404 Not Found result code for many URLs is not fine, and you will have to reduce them.

It is easier to find these types of crawl errors in the new Search Console. I still prefer the old Search Console for its ease of use.

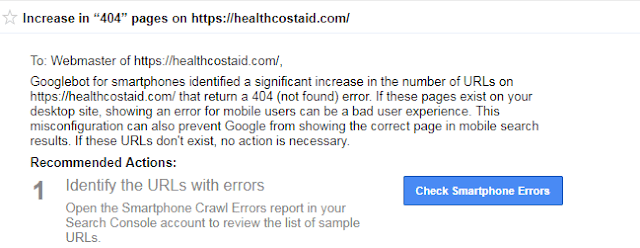

Google Webmaster Tools Broken Link Checker

How to Access Crawl Errors in Google Search console

Crawl errors can be accessed in Google Search Console under the Crawl section. There is no need to panic when you see many Crawl Errors 404 Not Found in Google Search Console. Give yourself credit for starting to fix each of them and building a healthy website.

You will see many 404, 500, 503, “Soft 404”, and 400 errors in this section. The section is divided into two main segments – Site Errors and URL Errors. All the crawl errors appear in these two segments.
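As a rough illustration of how these status codes relate to the error types Search Console lists, here is a minimal Python sketch. The mapping and the "soft 404" phrase list are my own assumptions for illustration; Google decides what counts as a soft 404 with its own logic, which is not public.

```python
def classify_crawl_result(status_code: int, body_text: str = "") -> str:
    """Map an HTTP status code to a rough crawl-error category."""
    # Hypothetical phrases that suggest a "not found" page served with 200.
    not_found_phrases = ("page not found", "nothing found", "no longer available")

    if 500 <= status_code <= 599:
        return "server error"      # e.g. 500, 503
    if status_code in (401, 403):
        return "access denied"
    if status_code in (404, 410):
        return "not found"
    if 400 <= status_code <= 499:
        return "other"             # e.g. 400
    if status_code == 200 and any(p in body_text.lower() for p in not_found_phrases):
        return "soft 404"          # looks like an error page but says "OK"
    return "ok"


for code in (404, 500, 503, 400):
    print(code, classify_crawl_result(code))
```

A soft 404 is the odd one out: the server answers 200, so only the page content gives it away.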

Site Errors in Google Search Console

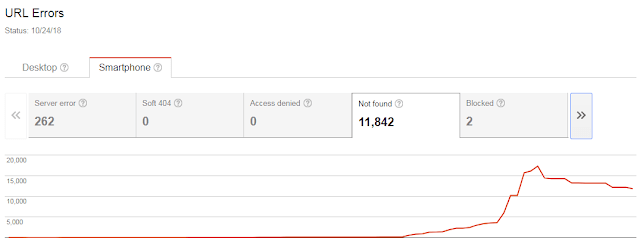

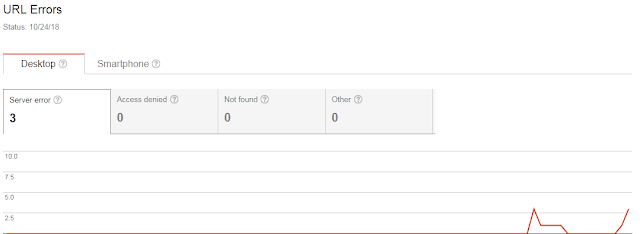

URL Errors 404 Not Found in Google Search Console

- Server error, Access denied, Not found, and Other errors are visible under Desktop.

- Server error, Access denied, Not found, Blocked and Other errors are visible under Smartphone.

Types of Crawl Errors in Google Search Console

Server Error HTTP 5XX Google Search Console

“Soft 404” errors

Access Denied

Not Found Errors

Blocked Errors

Other Errors

URL Parameters and Crawl Stats

Crawl Errors Checker to Track 404 Not Found URL

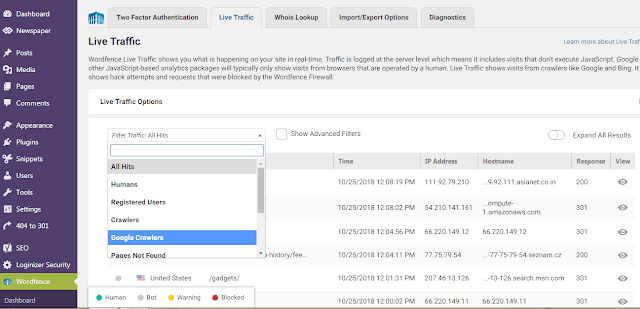

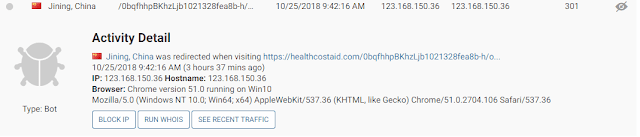

The 404 URLs appear in the Wordfence plugin under Tools > Live Traffic > Google Crawlers. These errors are reported by Googlebot, crawlers, and other bots.

You will see many columns here, including Type, Location, Page, Visited, Time, IP Address, Hostname and Response View. Watch the Response View and compare the URL errors appearing here with the URL errors you saw in Google Search Console. If they match, click on the 404 error to expand the activity detail.

Here is an example of the activity detail of Googlebot trying to access a non-existent page. Google Search Console shows these crawl errors for pages that do not exist, and they result from a targeted spam attack.

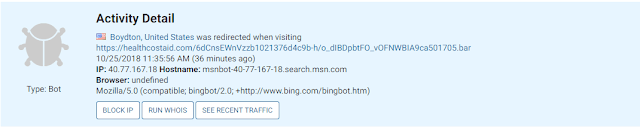

Activity Detail

United States Aliso Viejo, United States was redirected when visiting https://healthcostaid.com/eaQYwcb10213804804b-h/o_dDgRfUr099159f02.bar

10/24/2018 2:40:30 PM (5 minutes ago)

IP: 66.249.69.84 Hostname: crawl-66-249-69-84.googlebot.com

Browser: Chrome version 0.0 running on Android

Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.96 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

First, check the structure of this URL to see whether it is spam.

Understand the 404 Non Existing Url Structure to Identify Spam

Here are a few other spam URLs that Google is showing in Google Search Console as server errors.

http://healthcostaid.com/0ohPdcGCZSSbb10196r-i/g_mba682r-i/g_mlUho-dRGVqtjM4e335

http://healthcostaid.com/42qCcUQctTb102138d9215b-h/o_dXBGorlWtsg9dbfdd96c.bar
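One way to sort URLs like these from real posts is a simple pattern check. The sketch below assumes that genuine posts use the “/%postname%” permalink style, meaning a single lowercase, hyphen-separated slug; the `looks_like_spam` helper and its pattern are hypothetical, and the check is deliberately crude (it would also flag legitimate paths such as category or upload folders).

```python
import re
from urllib.parse import urlparse

# Assumption: a real post path is one lowercase slug, e.g.
# /consequences-for-not-paying-medical-bills
SLUG_PATTERN = re.compile(r"^/[a-z0-9]+(?:-[a-z0-9]+)*/?$")


def looks_like_spam(url: str) -> bool:
    """Return True when the URL path does not look like a post slug."""
    path = urlparse(url).path
    if path in ("", "/"):
        return False  # the home page is never spam
    return SLUG_PATTERN.match(path) is None


urls = [
    "http://healthcostaid.com/42qCcUQctTb102138d9215b-h/o_dXBGorlWtsg9dbfdd96c.bar",
    "https://healthcostaid.com/consequences-for-not-paying-medical-bills",
]
for url in urls:
    print(looks_like_spam(url), url)
```

Treat the result as a shortlist to review by hand, not as a verdict.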

If they are spam, the next step is to identify the source of the IP address and see whether the requests actually come from Googlebot.

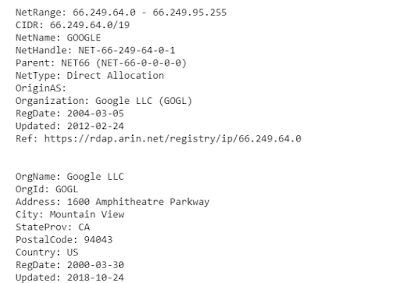

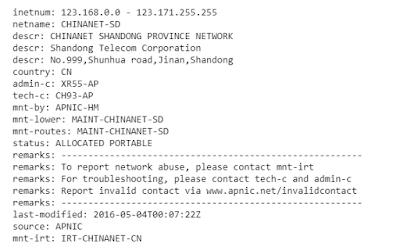

Check IP Address to find and fix URL errors on a Google search console

http://healthcostaid.com/7VlVORp-Vb10196r-i/g_m71c7er-i/g_mmiJgjJpTote6b68 from IP: 66.249.69.126

http://healthcostaid.com/1FiPKMF_-xKob10196r-i/g_m0d1ffr-i/g_mHSjWrFf-XsXwl814a1 from IP: 66.249.69.116

Use the IPINFO checker to check the source of the IP. I checked the source of this IP and this is the result.

The IP belongs to Googlebot. You should not block this IP, as it belongs to Google. Search Console reports these URLs as server errors. To stop Google crawling them, block the path of the 404 URL in the robots.txt file.
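The same check can be scripted. Google documents a two-step DNS test for its crawlers: a reverse lookup on the IP should give a hostname under googlebot.com or google.com, and a forward lookup on that hostname should return the original IP. The sketch below is a minimal version of that idea; the function name and the injectable lookup parameters are my own choices so the logic can be tried without network access.

```python
import socket

GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Reverse-then-forward DNS check for an IP that claims to be Googlebot."""
    # Default to real DNS lookups; fake resolvers can be passed in for testing.
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
            return False
        # The hostname must resolve back to the same IP, otherwise it is spoofed.
        return ip in forward_lookup(hostname)
    except OSError:
        # DNS failures (no PTR record, unknown host) count as "not verified".
        return False
```

Calling `is_verified_googlebot("66.249.69.84")` performs live DNS queries, so the answer depends on the network at the time you run it.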

How to Remove Google 404 error using robots.txt file

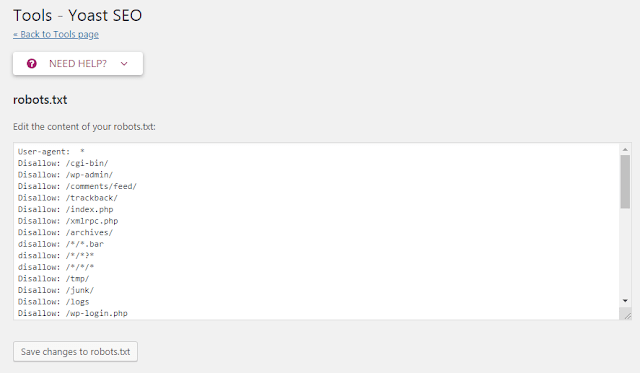

Here you can create and edit the robots.txt file. Create a robots.txt file if you do not have one.

You have to be very careful when editing it.

Create block paths for the spam URLs.

1. http://healthcostaid.com – this is your website.

2. http://healthcostaid.com/7VlVORp-Vb10196r-i/g_m71c7er-i/g_mmiJgjJpTote6b68 – this is the spam URL. Its path is made of three parts: /7VlVORp-Vb10196r-i, /g_m71c7er-i and /g_mmiJgjJpTote6b68.

You can build a path like this and add it to the robots.txt file. I clicked Save changes to robots.txt to save the configuration.

Be doubly careful when editing the robots.txt file. A wrong rule can make Google skip your real pages, and that can cost you rankings.
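If there are many spam URLs, the block paths can be derived with a short script instead of by hand. This is only a sketch under one assumption of mine: that blocking the first path segment of each spam URL is enough. The `disallow_rules` helper is hypothetical, and its output should be reviewed before anything is pasted into the live file.

```python
from urllib.parse import urlparse


def disallow_rules(spam_urls):
    """Return sorted, de-duplicated Disallow lines, one per first path segment."""
    prefixes = set()
    for url in spam_urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        if not segments:
            continue  # never emit a rule for the site root
        first = "/" + segments[0]
        # A trailing slash keeps the rule from matching longer, unrelated names.
        prefixes.add(first + "/" if len(segments) > 1 else first)
    return ["Disallow: " + p for p in sorted(prefixes)]


spam = [
    "http://healthcostaid.com/7VlVORp-Vb10196r-i/g_m71c7er-i/g_mmiJgjJpTote6b68",
    "http://healthcostaid.com/1FiPKMF_-xKob10196r-i/g_m0d1ffr-i/g_mHSjWrFf-XsXwl814a1",
]
print("User-agent: *")
print("\n".join(disallow_rules(spam)))
```

Skipping URLs with an empty path matters: a bare `Disallow: /` would block the whole site.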

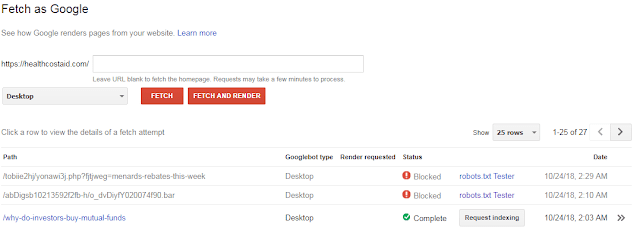

Fetch as Google tool – Crawler Simulator

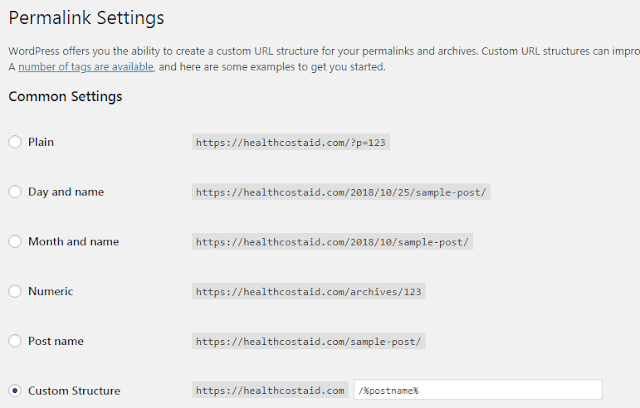

Permalink Settings to create a custom URL structure

I made sure that none of my post pages use this type of path. I went to Permalink Settings to create a custom URL structure.

I added “/%postname%” as the custom structure. This way all my posts use a custom structure like this: https://healthcostaid.com/consequences-for-not-paying-medical-bills. The path of this would be /*/. I will not block this path in robots.txt. If I added it, Googlebot would not be able to access my site.

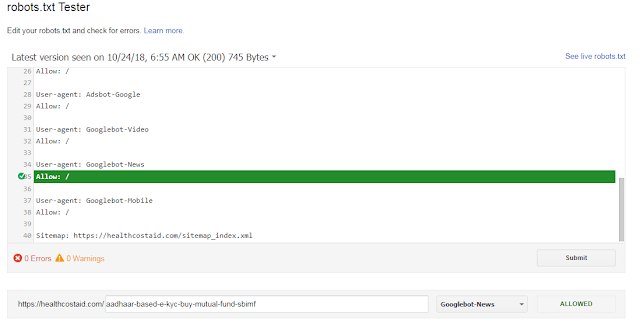

Use a proper robots.txt file

A proper robots.txt file blocks the spam and junk URLs and allows everything else to be crawled. This should reduce Crawl Errors 404 Not Found in Google Search Console. Below is a customized robots.txt file for a WordPress website. Copy it and paste it into your robots.txt file.

User-agent: *

User-agent: Mediapartners-Google

Allow: /

User-agent: Googlebot-Image

Allow: /

User-agent: Adsbot-Google

Allow: /

User-agent: Googlebot-Video

Allow: /

User-agent: Googlebot-News

Allow: /

User-agent: Googlebot-Mobile

Allow: /

Sitemap: https://www.shipmethis.com/sitemap.xml

Replace the Sitemap address above with your own sitemap address.

Google Search Console automatically adds the sitemap to your site. It will appear like this.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.shipmethis.com/sitemap.xml

This will allow all Google bots to access posts and pages.

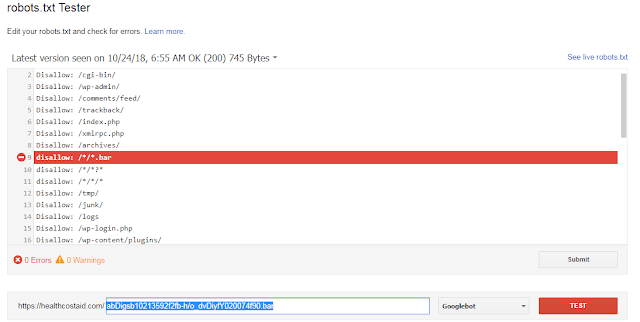

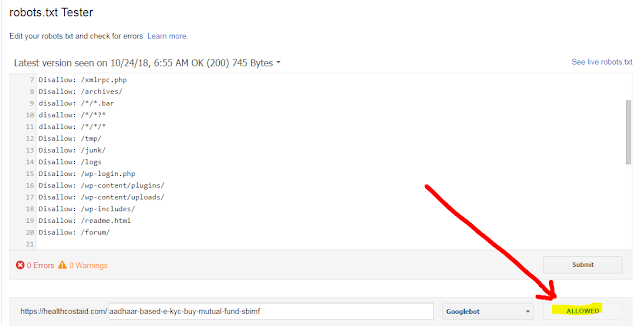

Crawl errors checker – robots.txt Tester in Google Search Console

The robots.txt Tester is an amazing tool in Google Search Console. It works as a crawl errors checker and lets you find out whether Google bots are allowed to crawl your posts and pages. It checks for crawl errors and path issues.

Go to Crawl > robots.txt Tester to test if the spam URLs are blocked.

Check if your post URLs are allowed.

Check if Google bots are allowed.

If all three checks pass, you have successfully updated the robots.txt file and fixed most of the Crawl Errors 404 Not Found in Google Search Console.
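The same checks can be run offline before you upload the file. The sketch below uses Python's standard `urllib.robotparser` module with the rules shown earlier in this post; the `check_urls` helper and the `/some-post` address are made up for illustration. One caveat: Python's parser applies the first matching rule, while Google picks the most specific one, so the two can disagree on files with overlapping rules.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt rules from this post, one directive per list item.
ROBOTS_LINES = [
    "User-agent: Mediapartners-Google",
    "Disallow:",
    "User-agent: *",
    "Disallow: /search",
    "Allow: /",
]


def check_urls(robots_lines, urls, user_agent="Googlebot"):
    """Return {url: True/False} telling whether user_agent may crawl each URL."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return {url: parser.can_fetch(user_agent, url) for url in urls}


results = check_urls(
    ROBOTS_LINES,
    [
        "https://www.shipmethis.com/search?q=404",  # should be blocked
        "https://www.shipmethis.com/some-post",     # hypothetical post URL
    ],
)
for url, allowed in results.items():
    print("allowed" if allowed else "blocked", url)
```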

Keep track of any loss of search rankings and of how your results show up in Google. Be careful while you do this. The safest way is to block spam IP addresses.

Block Unknown IP addresses to fix google crawl url 404 Not Found

How to Identify Bad IP address and Block them

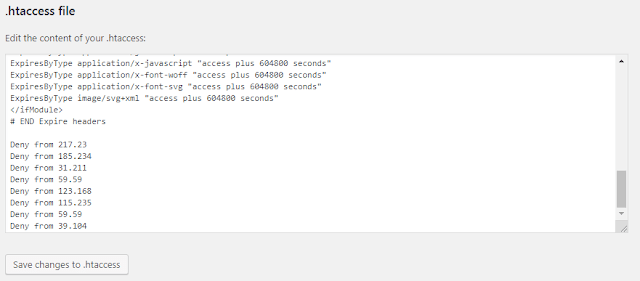

How to Block IP address in .htaccess To Remove Spam Crawl errors

Deny from 217.23

Deny from 185.234

Deny from 31.211

Deny from 59.59

Deny from 123.168

Deny from 115.235

Deny from 39.104

Deny from 193.169

Deny from 47.92

Deny from 114.99

Deny from 36.26

Deny from 62.210

Deny from 183.158
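Each two-number entry above is a partial IP address, so it blocks every address that starts with those numbers (65,536 addresses per line). Before saving, it is worth confirming that a real Googlebot address is not caught by one of these ranges. The sketch below does that with Python's standard `ipaddress` module; the helper names are my own, and it only handles the IPv4 partial-address form used in this list.

```python
import ipaddress


def deny_prefix_to_network(prefix: str):
    """Turn a partial IPv4 address such as '217.23' into the network it covers."""
    octets = prefix.split(".")
    padded = octets + ["0"] * (4 - len(octets))   # '217.23' -> 217.23.0.0
    mask_bits = 8 * len(octets)                   # two octets -> /16
    return ipaddress.ip_network(".".join(padded) + "/" + str(mask_bits))


def is_blocked(ip: str, deny_prefixes) -> bool:
    """Return True when ip falls inside any of the denied ranges."""
    address = ipaddress.ip_address(ip)
    return any(address in deny_prefix_to_network(p) for p in deny_prefixes)


DENY = ["217.23", "185.234", "31.211", "59.59"]
print(is_blocked("217.23.5.9", DENY))     # an address inside a denied range
print(is_blocked("66.249.69.84", DENY))   # the Googlebot IP seen earlier
```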

How to fix crawl errors in google webmaster tools Using Sitemap

It is possible that the URLs Google is attempting to crawl are cached or not properly updated. If requesting Google to re-crawl your website and its URLs does not resolve this, it would be best to consult an experienced website developer, as the problem is related to the configuration of your website.

I would advise you to generate a new sitemap for your site (you may use an online tool for this) and to ask Google to re-crawl your website URLs. The Google support pages describe how to do so.

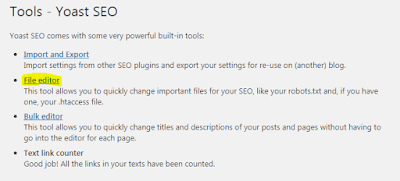

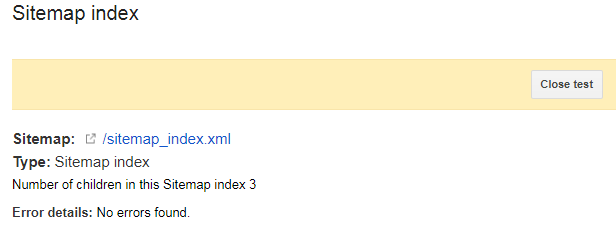

The Yoast plugin automatically creates a new sitemap index. You can access it at https://healthcostaid.com/sitemap_index.xml (change healthcostaid.com to your website address). Submit this to Google under Crawl > Sitemaps > Test > Resubmit.
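If you are not using a plugin, a basic sitemap is simple enough to generate yourself. The sketch below builds a minimal file in the standard sitemaps.org format from a list of page URLs. The `build_sitemap` helper is hypothetical, and it writes only the required `loc` entry for each page; it is not how Yoast builds its sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML document listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # Each page gets <url><loc>...</loc></url>; special characters are escaped.
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = ["https://healthcostaid.com/consequences-for-not-paying-medical-bills"]
print(build_sitemap(pages))
```

List only pages that still exist, so the new sitemap does not send Google back to deleted URLs.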

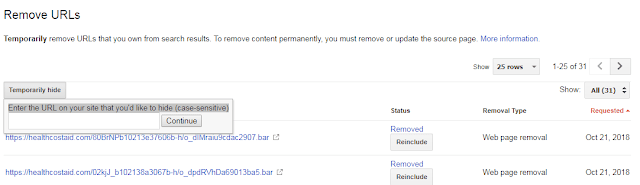

Remove URLs from the index with the Google Search Console

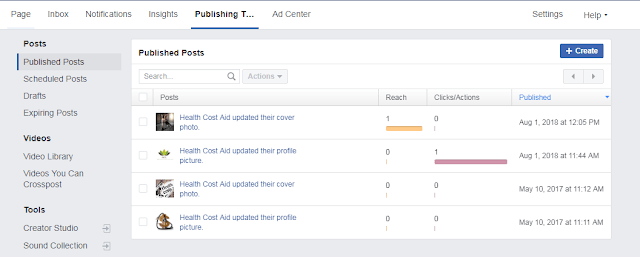

How to deal with 404 errors from Facebook

Do share this post with other bloggers, website owners, social pages, groups, and anyone who is struggling to fix 404 crawl errors in Google Search Console. It should be really helpful for them.